Meta has announced its SAM 3 line of artificial intelligence models, a notable step forward for its Segment Anything family. In this latest group of computer vision models, the firm has bundled several long-sought features, among them the ability to guide the system with short text prompts and to draw on suggested prompts when the user is unsure where to begin.

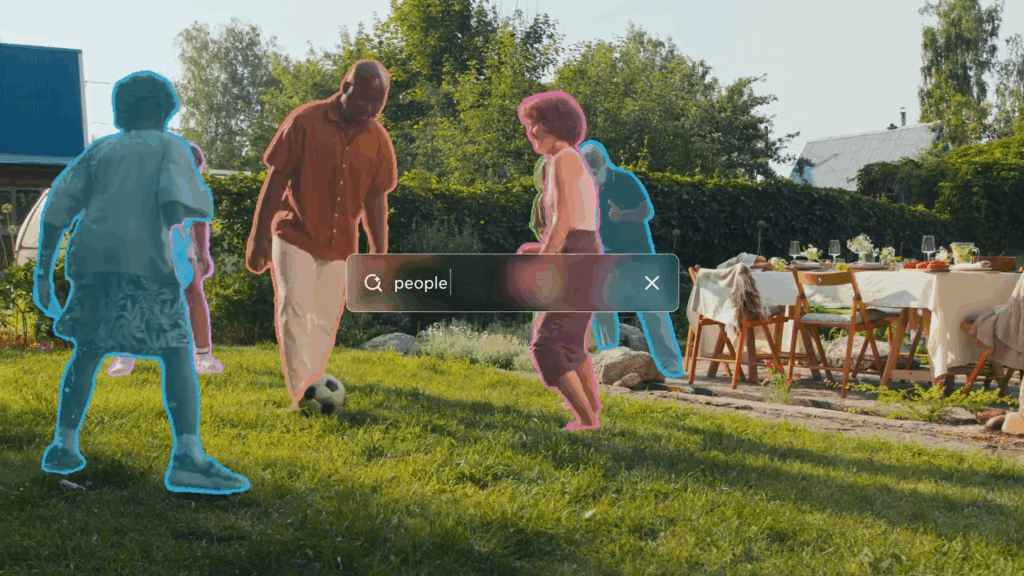

The models can now examine a still image, pick out each element in it, and build a three-dimensional reconstruction of any object or person it contains. In video, they can follow people and moving objects alike, marking them out with steady precision. As with earlier versions, these models remain open to inspection, free to download, and ready to run on ordinary machines.

Deep Dive Into Meta’s Latest SAM 3 and SAM 3D Innovations

In a blog post, the Menlo Park company laid out the workings of the new series. There are three models altogether. SAM 3 handles tracking and segmentation for images and video; SAM 3D Objects focuses on identifying items and shaping 3D renderings of them; and SAM 3D Bodies extends that craft to human forms, allowing a full scan to be built from a single picture.

Text-Guided Segmentation and Advanced Object Tracking

SAM 3 builds on the previous models and adds the ability to segment objects from short text prompts, so plain language is enough to direct it through images or video. Where the older systems required the user to point, click, or otherwise mark out an area by hand, this one responds to simple descriptions such as "a blue cap" or "a yellow bus" and generates a segmentation mask for each object that matches the words.
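To make the idea of "one mask per matching object" concrete, here is a toy sketch in plain Python. It is not the SAM 3 API: the scene grid, the `LABELS` table, and the `masks_for_prompt` helper are all hypothetical stand-ins, and the label lookup simply replaces what a real model would infer from pixels.

```python
# Toy illustration only: this is NOT the SAM 3 API.
from typing import Dict, List

Mask = List[List[bool]]

# Hypothetical scene: each cell holds an instance id (0 = background).
SCENE = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 0, 2],
    [3, 3, 0, 0, 0],
]

# Hypothetical label per instance, standing in for the model's own recognition.
LABELS = {1: "yellow bus", 2: "blue cap", 3: "yellow bus"}


def masks_for_prompt(prompt: str) -> Dict[int, Mask]:
    """Return one boolean mask per instance whose label matches the prompt."""
    wanted = prompt.strip().lower()
    result: Dict[int, Mask] = {}
    for instance_id, label in LABELS.items():
        if label == wanted:
            # True wherever the pixel belongs to this particular instance.
            result[instance_id] = [
                [cell == instance_id for cell in row] for row in SCENE
            ]
    return result


def mask_area(mask: Mask) -> int:
    """Count the pixels covered by a mask."""
    return sum(cell for row in mask for cell in row)


bus_masks = masks_for_prompt("yellow bus")
for instance_id, mask in bus_masks.items():
    print(f"instance {instance_id}: {mask_area(mask)} pixels")
```

The point of the sketch is the shape of the result: a single phrase yields a separate mask for every matching instance (here, two buses), rather than one mask for one clicked region.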

Meta notes that this had long been requested by users of its open-source tools. To handle it, the model uses a single, unified design that combines a perception encoder with detection and tracking components, which lets it process both pictures and video with minimal effort on the part of the user. SAM 3D, meanwhile, adds the ability to lift a three-dimensional shape out of a single two-dimensional picture.
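For readers unfamiliar with what "tracking" a segmented object across video frames involves, the sketch below shows one generic, textbook approach: matching each new mask to the previous frame's mask with the highest intersection-over-union (IoU). This is an illustrative stand-in, not a description of how SAM 3's tracker works; the `associate` function, the 0.3 threshold, and the sample masks are all assumptions made for the example.

```python
# Generic IoU-based association, shown for illustration; NOT SAM 3's tracker.
from typing import Dict, List, Set, Tuple

Pixel = Tuple[int, int]
Mask = Set[Pixel]  # a mask as the set of (row, col) pixels it covers


def iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def associate(
    prev: Dict[int, Mask], current: List[Mask], threshold: float = 0.3
) -> Dict[int, Mask]:
    """Greedily give each current mask the id of its best-overlapping
    previous mask, or a fresh id if nothing overlaps enough."""
    next_id = max(prev, default=0) + 1
    assigned: Dict[int, Mask] = {}
    for mask in current:
        best_id, best_score = None, threshold
        for track_id, old_mask in prev.items():
            if track_id in assigned:
                continue  # each previous track is matched at most once
            score = iou(mask, old_mask)
            if score >= best_score:
                best_id, best_score = track_id, score
        if best_id is None:
            best_id = next_id
            next_id += 1
        assigned[best_id] = mask
    return assigned


# Hypothetical data: track 1 shifts one pixel right, track 2 leaves the scene,
# and a new object appears at (5, 5).
frame1_tracks = {1: {(0, 0), (0, 1), (1, 0), (1, 1)}, 2: {(3, 3), (3, 4)}}
frame2_masks = [{(0, 1), (0, 2), (1, 1), (1, 2)}, {(5, 5)}]

tracks = associate(frame1_tracks, frame2_masks)
print(sorted(tracks))  # [1, 3]
```

The moved object keeps its identity (id 1) because its new mask still overlaps the old one, while the unmatched mask is treated as a new object. Real systems generally use richer cues than overlap alone.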

New 3D Reconstruction Engine for Realistic Mesh Generation

SAM 3D copes with occluded edges, cluttered spaces, and the general messiness of real-world environments, producing meshes and textured surfaces reliably. This is achieved through staged training and a new engine created to organize and shape 3D data, which allows the model to turn flat images into detailed, solid reconstructions.

Open-Source Availability and Community Access on GitHub & Hugging Face

Both SAM 3 and SAM 3D may be obtained through Meta’s pages on GitHub and Hugging Face, or through the links in the company’s own announcements. They are released under the SAM Licence, a set of terms written by Meta specifically for these models, which permits their use in both research and commercial work.

Beyond placing the tools in the hands of the broader open-source community, Meta has opened the Segment Anything Playground, an online space where anyone may try the models without installing them or preparing a machine to run them. It is free to enter and requires nothing more than a browser.

Integration into Instagram, Meta AI, and Facebook Marketplace

Meta is also weaving these systems into its own products. Instagram’s Edits app is slated to receive SAM 3, granting creators the power to apply new effects to chosen figures or objects within their videos. The same ability is being added to Vibes in the Meta AI app and on the web. Meanwhile, SAM 3D now underpins the View in Room function on Facebook Marketplace, allowing shoppers to see how a piece of home décor might look and sit in their own rooms before they decide to buy.

Final Words

Text prompts for segmentation and the reconstruction of 3D shapes from flat images are not parlor tricks. They are genuinely useful functions that developers and creators have long been asking Meta to provide. The company deserves credit for keeping everything open-source, although cynics may question its motives, wondering whether the move springs from goodwill or from strategy.

In any case, the outcome is the same: anyone with a laptop and an idea can now experiment with technology that would have seemed like science fiction ten years ago. It remains to be seen whether SAM 3 will mostly help people sell a couch on Marketplace or will drive the next breakthrough in medical imaging. In the meantime, Meta has made advanced computer vision widely available, and that alone is significant.